The buzz around agentic AI is growing louder—and for good reason.

While traditional LLM apps respond to prompts, agentic systems think, act, and coordinate. They don’t just generate text—they generate behavior.

From AutoGen to LangGraph, and LangChain to CrewAI, a new era of design thinking is emerging around how we architect intelligent agents.

In software, patterns define the shape of thought. In agentic AI, design patterns define how intelligence unfolds over time.

Let’s explore the most common—and most promising—design patterns in Agentic AI, and what they reveal about the future of autonomous systems.

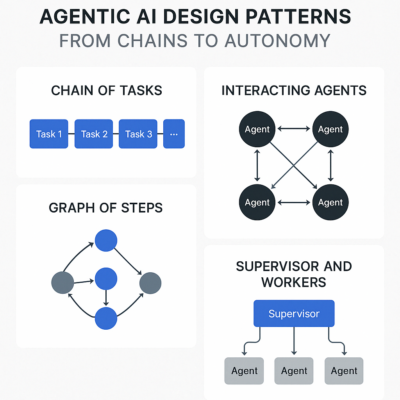

1. The Sequential Chain Pattern

“Do step 1, then step 2, then step 3.”

This is the simplest form of agent design, often built using tools like LangChain.

Example:

- Task: Answer a user’s question about a document.
- Steps:
  - Retrieve relevant document chunks.
  - Summarize them.
  - Format the output.

Pros:

- Easy to implement.
- Good for deterministic workflows.
- Works well when the task is predictable.

Cons:

- No room for adaptation.
- Can’t handle unexpected results or decisions.
- No internal feedback or learning loop.

Use this pattern when your task is linear, predictable, and the model doesn’t need to reflect or retry.
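The retrieve, summarize, and format steps can be sketched in plain Python. This is a minimal illustration, not LangChain code: `retrieve`, `summarize`, `format_output`, and `run_chain` are hypothetical names, and the summarizer is a placeholder for a real model call.

```python
# Hypothetical stand-ins for a retriever and an LLM call; a real chain
# would swap these for a vector-store lookup and a model request.
def retrieve(question: str, chunks: list[str]) -> list[str]:
    """Keep chunks that share at least one word with the question."""
    words = set(question.lower().split())
    return [c for c in chunks if words & set(c.lower().split())]


def summarize(chunks: list[str]) -> str:
    """Placeholder summarizer: simply joins the retrieved chunks."""
    return " ".join(chunks)


def format_output(summary: str) -> str:
    return f"Answer: {summary}"


def run_chain(question: str, chunks: list[str]) -> str:
    # Each step feeds the next; there is no branching and no retry.
    relevant = retrieve(question, chunks)
    summary = summarize(relevant)
    return format_output(summary)
```

Calling `run_chain("How long do refunds take?", docs)` always runs the same three steps in the same order, which is the strength and the limit of this pattern.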

2. The Tool-Using Agent Pattern

“Call tools dynamically when needed.”

Inspired by ReAct and widely used in frameworks like LangChain and Hugging Face Transformers Agents, this pattern gives the agent the ability to:

- Observe
- Think
- Act
- Reflect

Example:

- A research assistant agent decides to:
  - Search the web
  - Call a calculator API
  - Summarize findings

Pros:

- Dynamic and flexible.
- Great for agents that need real-world data or APIs.
- Can evolve through new tool integrations.

Cons:

- Can hallucinate tool usage if not tightly constrained.
- Needs careful error handling.

Use this when the model alone is not enough and the agent needs tools to turn its reasoning into action.
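A rough sketch of the tool-calling loop, assuming a fixed tool registry and a scripted policy in place of the model. `TOOLS`, `fake_policy`, and `run_agent` are hypothetical names, not part of any framework. The registry check and the `try` block address the two cons above: unknown tool names and tool failures are fed back to the agent as observations.

```python
from typing import Callable

# Tool registry: the agent may only call names listed here, which is one
# way to guard against hallucinated tool calls.
TOOLS: dict[str, Callable[[str], str]] = {
    "add": lambda arg: str(sum(float(x) for x in arg.split("+"))),
    "upper": lambda arg: arg.upper(),
}


def fake_policy(task: str, observations: list[str]) -> tuple[str, str]:
    """Hypothetical stand-in for the LLM: returns (action, argument)."""
    if not observations:
        return ("add", task)              # first step: call a tool
    return ("finish", observations[-1])   # then stop with the last result


def run_agent(task: str, policy=fake_policy, max_steps: int = 5) -> str:
    observations: list[str] = []
    for _ in range(max_steps):
        action, arg = policy(task, observations)
        if action == "finish":
            return arg
        tool = TOOLS.get(action)
        if tool is None:
            # Report the bad call back to the agent and let it try again.
            observations.append(f"error: unknown tool {action!r}")
            continue
        try:
            observations.append(tool(arg))
        except Exception as exc:
            observations.append(f"error: {exc}")
    return "gave up: step limit reached"
```

The step limit matters as much as the registry: without it, a policy that keeps asking for a missing tool would never stop.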

3. The Multi-Agent Collaboration Pattern

“Different agents, different roles—working together.”

Popularized by AutoGen, this pattern assigns specific roles to agents:

- Coder Agent writes code
- Critic Agent reviews output
- Planner Agent breaks down tasks

These agents communicate in chat-like sessions until a goal is reached.

Example: Fix a bug in an app:

- Coder proposes fix
- Critic tests it
- DevOps agent deploys it

Pros:

- Mimics human team dynamics.
- Enables division of labor and specialization.
- Scales complex tasks through distributed thinking.

Cons:

- Harder to debug.
- Requires robust turn-taking logic.
- Prone to infinite loops if poorly designed.

Use this when solving complex, multi-step problems that benefit from agent diversity.
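To make the coder and critic exchange concrete, here is a toy two-agent loop. It does not use AutoGen: `coder`, `critic`, and `collaborate` are hypothetical functions with scripted behavior, and the `max_rounds` cap is one simple way to avoid the infinite loops mentioned above.

```python
from typing import Optional


def coder(feedback: Optional[str]) -> str:
    """Hypothetical coder agent: fixes the bug once the critic points it out."""
    if feedback is None:
        return "def add(a, b): return a - b"   # buggy first attempt
    return "def add(a, b): return a + b"


def critic(code: str) -> Optional[str]:
    """Hypothetical critic agent: runs one test, returns feedback or None if OK."""
    namespace: dict = {}
    exec(code, namespace)  # acceptable for this toy; never exec untrusted code
    if namespace["add"](2, 3) != 5:
        return "add(2, 3) should be 5"
    return None


def collaborate(max_rounds: int = 3) -> tuple[str, list[str]]:
    transcript: list[str] = []
    feedback: Optional[str] = None
    for _ in range(max_rounds):
        code = coder(feedback)
        transcript.append(f"coder: {code}")
        feedback = critic(code)
        if feedback is None:
            transcript.append("critic: approved")
            return code, transcript
        transcript.append(f"critic: {feedback}")
    raise RuntimeError("no agreement within max_rounds")
```

The transcript doubles as a debugging aid, since it records which agent said what in each round.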

4. The Reflective Loop Pattern

“Try → Evaluate → Retry → Improve”

This pattern builds self-evaluation into the agent. After every task, the agent:

- Reflects on the result
- Evaluates its success
- Decides whether to retry or move on

Implemented well in:

- LangGraph (via graph state transitions)
- OpenAI’s function-calling + memory systems

Example:

- AI agent writing an article:
  - Drafts a paragraph.
  - Reviews for quality.
  - Rewrites if clarity is poor.

Pros:

- Encourages high-quality output.
- Reduces hallucination and errors.
- Brings an element of learning.

Cons:

- Expensive in terms of tokens and time.
- Needs well-tuned evaluation heuristics.

Use this when quality and accuracy matter more than speed.
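The try, evaluate, retry cycle might look like the sketch below. Everything here is an assumption for illustration: `draft` stands in for a model call, and `evaluate` is a toy length-based heuristic where a real system would use a rubric or a second model.

```python
def draft(topic: str, attempt: int) -> str:
    """Hypothetical generator: later attempts add more detail."""
    details = [
        "It chains steps.",
        "Each step feeds the next.",
        "There is no branching.",
    ]
    return f"{topic}: " + " ".join(details[: attempt + 1])


def evaluate(text: str, min_words: int = 10) -> float:
    """Toy heuristic: score by word count, capped at 1.0."""
    return min(len(text.split()) / min_words, 1.0)


def reflect_loop(topic: str, threshold: float = 1.0, max_attempts: int = 3):
    """Return (best_text, best_score, attempts_used)."""
    best, best_score, used = "", 0.0, 0
    for attempt in range(max_attempts):
        used = attempt + 1
        text = draft(topic, attempt)
        score = evaluate(text)
        if score > best_score:
            best, best_score = text, score
        if score >= threshold:
            break  # good enough: stop spending tokens
    return best, best_score, used
```

The `max_attempts` cap bounds the token and time cost, and keeping the best draft so far means a failed final attempt never makes the output worse.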

5. The Graph-Based Planning Pattern

“Every state leads to multiple outcomes—choose wisely.”

Popularized by LangGraph, this pattern treats agents like state machines:

- Each node is a task or decision
- Arrows represent transitions based on output

Example:

- An agent that reads logs, identifies bugs, writes a fix, tests it, and either:
  - Commits if successful
  - Retries if test fails
  - Asks for human help if blocked

Pros:

- State is explicit and trackable.
- Supports loops, branches, retries, dead-ends.
- Makes planning visible and debuggable.

Cons:

- Higher implementation complexity.
- Needs robust state management.

Use this for long-running, goal-driven agents that need structured autonomy.
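The fix, test, and commit-or-retry example can be sketched as a small hand-rolled state machine. This is not the LangGraph API. The node functions, the `NODES` table, and `run_graph` are hypothetical, and the rule that the fix succeeds on the second attempt is scripted purely for illustration.

```python
from typing import Callable


def write_fix(state: dict) -> str:
    state["attempts"] += 1
    # Scripted for the example: the fix only works on the second attempt.
    state["fix_ok"] = state["attempts"] >= 2
    return "run_tests"


def run_tests(state: dict) -> str:
    if state["fix_ok"]:
        return "commit"
    if state["attempts"] >= state["max_attempts"]:
        return "ask_human"   # blocked: hand off to a person
    return "write_fix"       # retry


def commit(state: dict) -> str:
    state["result"] = "committed"
    return "END"


def ask_human(state: dict) -> str:
    state["result"] = "escalated"
    return "END"


# Each node returns the name of the next node, so transitions depend on output.
NODES: dict[str, Callable[[dict], str]] = {
    "write_fix": write_fix,
    "run_tests": run_tests,
    "commit": commit,
    "ask_human": ask_human,
}


def run_graph(state: dict, start: str = "write_fix", max_steps: int = 20) -> list[str]:
    node, path = start, []
    while node != "END":
        if len(path) >= max_steps:
            raise RuntimeError("step limit reached")
        path.append(node)
        node = NODES[node](state)
    return path
```

Because `run_graph` returns the visited path and the state is a plain dictionary, every decision the agent made can be inspected after the run.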

6. The Supervisor + Worker Agent Pattern

“One agent to rule them all.”

In this pattern:

- A Supervisor Agent interprets user input
- Delegates tasks to Worker Agents
- Collects and synthesizes responses

Similar to:

- CrewAI
- LangChain agent routing
- AutoGen’s GroupChat + UserProxy structure

Example:

- You say: “Get me a report on product X’s performance last quarter.”
- Supervisor:
  - Sends query to data agent
  - Sends request to writing agent
  - Combines everything for a response

Pros:

- Task routing is clean and logical.
- Enables scalable team-based architectures.
- Reduces prompt complexity for each agent.

Cons:

- Can bottleneck at the supervisor level.
- Needs strong prompting to avoid overlap.

Use when you want a modular system with human-like task delegation.
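A minimal sketch of the delegation flow from the report example. `data_agent`, `writing_agent`, `WORKERS`, and `supervisor` are hypothetical names, and the plan is hard-coded here, whereas a real supervisor would typically ask a model to produce it.

```python
from typing import Callable


def data_agent(request: str) -> str:
    """Hypothetical worker: would query a database in a real system."""
    return f"[data for: {request}]"


def writing_agent(material: str) -> str:
    """Hypothetical worker: would call a model to write the report."""
    return f"Report based on {material}"


WORKERS: dict[str, Callable[[str], str]] = {
    "data": data_agent,
    "writing": writing_agent,
}


def supervisor(user_request: str) -> str:
    # Fixed plan for illustration; each worker receives the previous output.
    plan = ["data", "writing"]
    result = user_request
    for worker_name in plan:
        result = WORKERS[worker_name](result)
    return result
```

Each worker sees only its own input, which is what keeps per-agent prompts small. It also shows the bottleneck: every step passes through `supervisor`.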

7. The Modular Orchestration Pattern

“Agent behaviors as plug-and-play modules.”

Think of agents as microservices. You design them like LEGO blocks—each with a single responsibility. A central orchestrator manages execution.

Popular in:

- CrewAI
- Custom LangGraph + LangChain pipelines
- Event-driven systems (e.g., FastAPI + Celery)

Pros:

- High reusability and testability.
- Works well with microservice architectures.
- Great for enterprise-grade pipelines.

Cons:

- Integration overhead.
- More engineering than prompt design.

Use this when building enterprise-level GenAI platforms.
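One way to picture the LEGO-block idea is a shared interface plus a registry. The sketch below is an assumption-laden illustration: `Module`, `Cleaner`, `Counter`, and `Orchestrator` are hypothetical, and the modules do trivial text processing where real ones would wrap agents or services.

```python
from typing import Protocol


class Module(Protocol):
    """Contract every pluggable module follows: a name and a run() method."""
    name: str

    def run(self, payload: dict) -> dict: ...


class Cleaner:
    name = "clean"

    def run(self, payload: dict) -> dict:
        return {**payload, "text": payload["text"].strip()}


class Counter:
    name = "count"

    def run(self, payload: dict) -> dict:
        return {**payload, "words": len(payload["text"].split())}


class Orchestrator:
    """Central orchestrator: holds modules by name and runs them in order."""

    def __init__(self) -> None:
        self._modules: dict[str, Module] = {}

    def register(self, module: Module) -> None:
        self._modules[module.name] = module

    def execute(self, pipeline: list[str], payload: dict) -> dict:
        for name in pipeline:
            payload = self._modules[name].run(payload)
        return payload
```

Since each module can be tested alone and pipelines are just lists of names, swapping or reordering behavior needs no change to the modules themselves.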

The Future: Hybrid Patterns

Most real-world systems will blend these patterns.

Example:

- A Supervisor routes to a graph-based planner
- Which uses multi-agent loops with tool-using agents
- With memory and reflection at every step

These hybrid models are the future of Agentic AI-as-infrastructure.
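As a very small taste of blending, the sketch below combines supervisor routing, a tool call, a reflection check, and step-by-step memory in one worker. It leaves out the graph planner and the multi-agent loop to stay short, and every name in it (`lookup_tool`, `research_worker`, `supervisor`) is hypothetical.

```python
from typing import Optional


def lookup_tool(key: str) -> Optional[str]:
    """Toy tool: a one-entry fact table standing in for a search API."""
    facts = {"capital of france": "Paris"}
    return facts.get(key.lower())


def research_worker(task: str, memory: list, max_tries: int = 2) -> str:
    """Worker that calls a tool, checks the result, and retries with a revised query."""
    query = task
    for _ in range(max_tries):
        result = lookup_tool(query)
        memory.append((query, result))   # memory: record every step
        if result is not None:           # reflection: is the result usable?
            return result
        query = query.strip(" ?")        # revise the query and retry
    return "unknown"


def supervisor(task: str, memory: list) -> str:
    # Routing rule is a placeholder: questions go to research, the rest is echoed.
    workers = {"research": research_worker, "echo": lambda t, m: t}
    route = "research" if task.endswith("?") else "echo"
    return workers[route](task, memory)
```

With `memory = []`, calling `supervisor("Capital of France?", memory)` fails on the first lookup, cleans the query, succeeds on the second, and leaves both attempts in `memory` for later inspection.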