In the beginning, there was the prompt.

A beautifully simple line of text—crafted by humans, interpreted by machines. We marveled at how language models like GPT could write essays, generate code, and even simulate personalities. But beneath the surface of prompt engineering, something was always missing: structure, memory, and control.

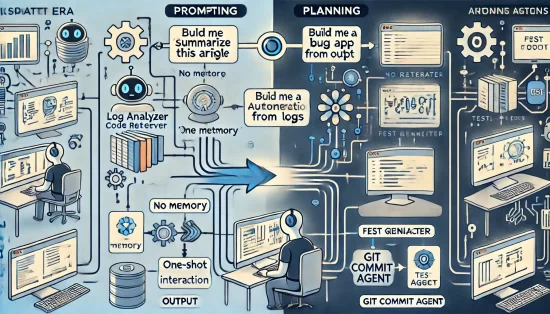

As we pushed the boundaries of what AI could do, we realized a single prompt was never enough. The future needed something more intelligent, more autonomous, more… agentic. That’s when the shift happened—from prompting to planning.

The Prompt Era: Power in Simplicity

Prompting, as it emerged, was both an art and a science. Engineers, designers, and even marketers learned to “whisper” to models using cleverly crafted sentences. With zero-shot or few-shot prompting, you could generate powerful results—code snippets, business strategies, summaries, poetry.

But this simplicity came with limitations:

- No contextual memory beyond a single session.
- No goal-oriented behavior.
- No structured workflows.
- No ability to self-correct or loop.

We were hacking intelligence without strategy. Prompts were powerful tools, but they were reactive, not proactive.

The Shift: Why Prompting Alone Wasn’t Enough

As enterprises began integrating LLMs into critical systems, they encountered real-world constraints:

- Multi-step tasks couldn’t be executed reliably.
- Data retrieval, decision-making, and error correction required more than single-pass generation.
- Most importantly, long-term planning and execution were impossible without a framework.

We were building AI apps with the same method we used to test them: ad-hoc prompting. The process was fragile and did not scale.

And then came the breakthrough.

Planning: The Core of Agentic Intelligence

Agentic AI reframed the conversation. Instead of a single model reacting to a single prompt, agentic systems operate like teams of experts working toward a common objective. Planning became the backbone—allowing agents to:

✅ Break down goals into sub-tasks

✅ Delegate work between specialized agents

✅ Retain memory and update state

✅ Monitor progress and self-correct

Frameworks like LangGraph, CrewAI, AutoGen, and OpenAgents gave us tools to implement agent workflows, turning passive AI into active problem solvers.

We moved from prompting to planning pipelines.
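To make the four capabilities above concrete, here is a minimal sketch in plain Python. It does not use any of the frameworks named above, and every name in it (`State`, `plan`, `run_goal`, the stub workers) is hypothetical. The planner is hard-coded where a real system would probably call an LLM, so treat it as an illustration of the control flow rather than a reference implementation.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class State:
    """Shared state that persists across sub-tasks (the agents' memory)."""
    goal: str
    results: Dict[str, str] = field(default_factory=dict)
    log: List[str] = field(default_factory=list)


# A worker receives a sub-task name plus the shared state and returns a result.
Worker = Callable[[str, State], str]


def plan(goal: str) -> List[str]:
    """Break a goal into sub-tasks. Hard-coded here (assumption);
    in practice this step would likely be an LLM call."""
    return ["research", "draft", "review"]


def run_goal(goal: str, workers: Dict[str, Worker], max_retries: int = 2) -> State:
    """Delegate each sub-task to its worker, retrying on failure."""
    state = State(goal=goal)
    for task in plan(goal):
        worker = workers[task]  # delegation: one specialized worker per task
        for attempt in range(1, max_retries + 2):
            try:
                state.results[task] = worker(task, state)  # update state
                state.log.append(f"{task}: ok on attempt {attempt}")
                break
            except Exception as exc:  # monitor progress, then retry
                state.log.append(f"{task}: attempt {attempt} failed ({exc})")
        else:
            # Every attempt failed: stop instead of building on a missing result.
            state.log.append(f"{task}: giving up")
            break
    return state


def make_flaky_drafter() -> Worker:
    """Stub worker that fails once, to exercise the retry path."""
    calls = {"n": 0}

    def drafter(task: str, state: State) -> str:
        calls["n"] += 1
        if calls["n"] == 1:
            raise RuntimeError("empty draft")
        return f"draft based on {state.results['research']}"

    return drafter


workers: Dict[str, Worker] = {
    "research": lambda task, state: "three sources",
    "draft": make_flaky_drafter(),
    "review": lambda task, state: f"approved: {state.results['draft']}",
}

final = run_goal("write a summary", workers)
for entry in final.log:
    print(entry)
```

The draft worker fails on its first call and succeeds on the second, so the log records one failed attempt before the review step runs. The retry loop is the simplest possible form of self-correction; a fuller system might feed the error back into the next attempt.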

Planning in Action: What It Looks Like

Let’s say you want to build an agent that debugs code from logs. A prompt-based approach might ask an LLM:

“Here’s the error log. What’s wrong?”

You’d get an answer, but not much else.

A planning-based system, on the other hand, would:

- Ingest the logs using a log parser agent.
- Identify error patterns using a semantic classifier.
- Fetch relevant code using a vector search.
- Generate a fix via a code generator agent.
- Test the fix with a simulation agent.
- Commit changes to Git with a version control agent.

Each step is handled by a specialized agent with clear objectives and shared memory. This isn’t just AI assistance—it’s AI collaboration.

How We Got There: Lessons from the Field

In our journey building agentic workflows, we learned a few key lessons:

1. Prompting Is a Skill; Planning Is a System

Prompting remains critical at the micro level—within agents—but macro success comes from structuring those agents into reliable systems. Think prompt → task → workflow → goal.

2. Memory Is the Game Changer

Short-term memory (conversation history) and long-term memory (knowledge bases, embeddings) are what elevate agents from reactive to reflective. Without memory, there is no planning.
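As a rough sketch of the two kinds of memory, the class below keeps a bounded window of recent turns (short-term) and a searchable store of facts (long-term). The `AgentMemory` class and its method names are hypothetical, and the word-count "embedding" is a deliberately crude stand-in for a learned embedding model, used only so the example runs without external dependencies.

```python
import math
from collections import Counter, deque
from typing import Deque, List, Tuple


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would use a learned model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class AgentMemory:
    def __init__(self, short_term_size: int = 3) -> None:
        # Short-term: only the most recent turns survive.
        self.short_term: Deque[str] = deque(maxlen=short_term_size)
        # Long-term: (embedding, fact) pairs that persist and can be searched.
        self.long_term: List[Tuple[Counter, str]] = []

    def observe(self, turn: str) -> None:
        """Add a conversation turn; the oldest turn drops off when full."""
        self.short_term.append(turn)

    def remember(self, fact: str) -> None:
        """Store a fact in long-term memory."""
        self.long_term.append((embed(fact), fact))

    def recall(self, query: str, k: int = 1) -> List[str]:
        """Return the k stored facts most similar to the query."""
        q = embed(query)
        ranked = sorted(
            self.long_term, key=lambda item: cosine(q, item[0]), reverse=True
        )
        return [fact for _, fact in ranked[:k]]


mem = AgentMemory(short_term_size=3)
for turn in ["hello", "deploy failed", "which script?", "try again"]:
    mem.observe(turn)

mem.remember("the deploy script lives in tools/deploy.sh")
mem.remember("the staging database is read-only")

print(list(mem.short_term))  # the first turn has been dropped
print(mem.recall("where is the deploy script"))
```

The short-term window forgets by design, while the long-term store is what lets an agent bring earlier knowledge into a new plan.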

3. Specialization Wins

Monolithic LLMs are like Swiss army knives. Agentic systems, on the other hand, are like orchestras—each component focused on its strength, coordinated for harmony.

4. Human-in-the-Loop Is Still Essential

Autonomous agents are powerful, but a strategic human touch ensures they align with business context, ethics, and domain knowledge. We are not replacing humans—we are amplifying them.
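One simple way to keep a human in the loop is an approval gate: low-risk actions run automatically, while risky ones wait for a person to sign off. The sketch below assumes a hypothetical `Action` type with a `risky` flag and an `approve` callback. In a real deployment the callback might open a review ticket or prompt an operator; here it is a plain function so the example runs unattended.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Action:
    description: str
    risky: bool  # assumption: risk is flagged upstream, e.g. by policy rules


def run_with_oversight(
    actions: List[Action], approve: Callable[[Action], bool]
) -> List[str]:
    """Execute actions in order, asking a human before any risky one."""
    outcomes: List[str] = []
    for action in actions:
        if action.risky and not approve(action):
            outcomes.append(f"skipped: {action.description}")
            continue
        # Placeholder for real execution; this sketch only records the outcome.
        outcomes.append(f"done: {action.description}")
    return outcomes


planned = [
    Action("summarize the error log", risky=False),
    Action("push fix to main branch", risky=True),
]

# Stand-in reviewer that rejects everything risky.
outcomes = run_with_oversight(planned, approve=lambda action: False)
print(outcomes)
# prints: ['done: summarize the error log', 'skipped: push fix to main branch']
```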

What’s Next? From Planning to Autonomy

The planning revolution is still in its early days.

Soon, agents won’t just follow workflows—they’ll design them. Using meta-cognition and reflective loops, agents will debug themselves, propose strategies, and improve with use.

Imagine systems where:

- AI agents learn company-specific policies.
- Development agents commit code with zero bugs.
- Customer support agents understand user history before the chat even starts.

We are headed toward a future where AI plans, adapts, and grows—like humans do.

Conclusion: The Rise of the AI Architect

If prompts were the tools of AI whisperers, then planning is the blueprint of AI architects.

We no longer talk to AI—we build with AI.

As developers, researchers, and builders, we’re not just crafting words. We’re designing intelligence. From single prompts to dynamic, multi-agent systems, we’ve unlocked a new frontier in human-machine collaboration.

And this is just the beginning.